Power Usage Effectiveness (PUE) is one of the most important metrics in data centre operations today. PUE is defined as the ratio of total facility power to IT equipment power, so a lower PUE means a smaller share of your facility's energy goes to non-computing functions, such as cooling, lighting, and power distribution.

Yet many facilities with excellent server hardware and advanced cooling systems still struggle with inefficient airflow. One of the most overlooked contributors to poor PUE is the absence or misuse of blanking panels.

Today, we’ll explain how blanking panels directly impact cooling efficiency and offer actionable ways to use them to reduce your PUE, often with immediate results.

What Is PUE and Why Does It Matter?

PUE = Total Facility Power / IT Equipment Power. For example, if your data centre consumes 1,500 kW total, and your IT equipment uses 1,000 kW, your PUE is 1.5. This means for every watt used by your servers, you’re spending 0.5 watts on cooling and infrastructure overhead.
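The formula is simple enough to express directly. Below is a minimal Python sketch of the calculation and the worked example above. The function names are illustrative only and are not part of any standard tool.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts spent on non-IT overhead (cooling, lighting, distribution) per IT watt."""
    return pue_value - 1.0


ratio = pue(1500, 1000)
print(ratio)                        # 1.5
print(overhead_per_it_watt(ratio))  # 0.5
```

A PUE of exactly 1.0 would mean zero overhead, which is why the subtraction of 1.0 gives the overhead per IT watt.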

According to the Uptime Institute, the global average PUE is around 1.58, but high-performing centres push closer to 1.2 or even 1.1. Every decimal point counts, especially at scale, because energy costs can account for 25–40% of operational expenses.

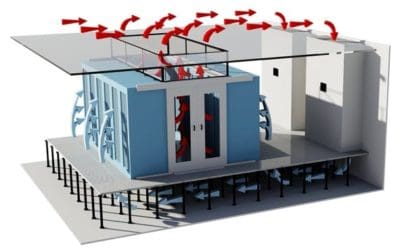

How Poor Airflow Increases PUE

One of the most common causes of inflated PUE is recirculation of hot exhaust air into the cold aisle due to open gaps in server racks. These gaps allow hot and cold air to mix, making it harder for cooling systems to maintain target temperatures.

The result?

- Fans work harder

- Chillers consume more power

- Equipment experiences temperature swings

- Cooling systems become less efficient

This is where blanking panels come in.

What Are Blanking Panels and How Do They Work?

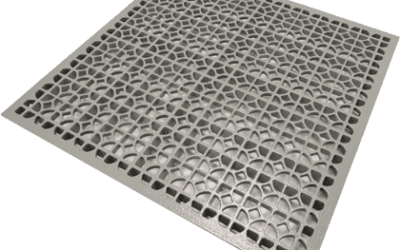

Blanking panels are solid inserts installed in unused rack spaces to block airflow between the front (cold) and rear (hot) sections of a server cabinet.

When installed correctly, they:

- Prevent hot air recirculation

- Support a hot aisle/cold aisle containment strategy

- Allow cooling systems to operate more efficiently

EziBlank® offers modular blanking panels that snap into standard 19″, 21″, and 23″ racks, enabling full thermal isolation even in non-standard configurations.

Direct Impact on Cooling Efficiency

Here’s how blanking panels specifically reduce your cooling load:

1. Better Cold Air Utilization

Without blanking panels, cold air is wasted as it escapes through open rack spaces instead of passing through equipment, while hot exhaust can recirculate to server inlets. This causes hotspots in active equipment zones, triggering cooling units to overcompensate.

By directing all cold airflow to the servers that need it, blanking panels ensure:

- Consistent inlet temperature across equipment

- Reduced bypass airflow

- Optimized supply air delivery

2. Less Overcooling Required

When cold air leaks or mixes with hot return air, facilities tend to lower ambient temperature setpoints to compensate. This drives up cooling energy usage unnecessarily.

With properly installed blanking panels and brush solutions like the EziBlank® 1RU Brush Panel, you can maintain higher setpoint temperatures with less risk of hotspots, reducing compressor runtime and fan speeds.

3. Balanced Air Pressure and Flow

Sealing unused rack spaces with blanking panels helps maintain static pressure under raised floors, especially when used alongside floor grommets. This allows airflow tiles to function more precisely, delivering the right amount of air to each rack zone.

PUE Reduction in Real Numbers

Let’s say your facility has:

- 100 server racks

- 30% of rack units (RU) open and unpanelled, on average

- The cooling system accounts for the largest share of non-IT power draw
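To size the job, a short sketch like the one below estimates how many rack units would need blanking. The 42U cabinet height is a hypothetical figure chosen for illustration; substitute the heights of your own racks. Integer arithmetic is used so the percentage does not introduce floating-point rounding.

```python
def open_rack_units(racks: int, ru_per_rack: int, open_percent: int) -> int:
    """Estimate unpanelled rack units from rack count, rack height and % open."""
    return racks * ru_per_rack * open_percent // 100


# 100 racks with 30% open space, assuming (hypothetically) 42U cabinets
print(open_rack_units(100, 42, 30))  # 1260
```

Each open RU needs either a 1RU panel or a share of a multi-RU panel, so the result is an upper bound on the number of 1RU panels required.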

Fitting EziBlank® panels in every open RU directs airflow through working equipment instead of letting it bypass, which could reduce the required cold air supply by up to 25%.

On these assumptions, your PUE could fall from 1.58 to around 1.45, cutting total facility energy by roughly 8% for the same IT load. Multiply that by your monthly power bill and you’re looking at real savings.
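At a constant IT load, total facility energy scales with PUE, so the fractional saving is (1.58 - 1.45) / 1.58. The sketch below assumes the IT load stays fixed; the 1,000 kW load and the tariff of 0.10 per kWh in the usage lines are hypothetical figures, and the 730-hour month is simply 8,760 hours divided by 12.

```python
HOURS_PER_MONTH = 730  # average month: 8,760 hours per year / 12


def total_energy_reduction(pue_before: float, pue_after: float) -> float:
    """Fractional drop in total facility energy when PUE falls at a constant IT load."""
    return (pue_before - pue_after) / pue_before


def monthly_savings(it_load_kw: float, pue_before: float, pue_after: float,
                    price_per_kwh: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost saved per month: IT load x PUE reduction x hours x tariff."""
    saved_kw = it_load_kw * (pue_before - pue_after)
    return saved_kw * hours * price_per_kwh


print(f"{total_energy_reduction(1.58, 1.45):.1%}")           # 8.2%
# Hypothetical 1,000 kW IT load at 0.10 per kWh
print(f"{monthly_savings(1000, 1.58, 1.45, 0.10):,.0f}")     # 9,490
```

Because the saving is proportional to the IT load, doubling the load in this model doubles the monthly figure.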

Why EziBlank® Panels Are Optimized for Efficiency

Not all blanking panels deliver the same impact. EziBlank® panels are:

- Tool-free for rapid deployment

- UL94-V0 flame-retardant for safety

- Snappable and modular, allowing reuse and dynamic adjustment

- Scratch-resistant, preserving performance and aesthetics long-term

Their precision fit and lightweight construction ensure there are no gaps for airflow leakage, making them ideal for high-efficiency environments.

Improve Your Strategy with Supporting Products

While blanking panels provide foundational airflow control, they work best in a holistic airflow strategy that includes:

- Flex Brush Panels for sealing rack bottom cavities

- Floor Tiles for targeted directional cooling

- Containment Walls to separate hot and cold aisles completely

This layered approach gives you control at every stage of the airflow cycle, from underfloor distribution to rack-level sealing.

Common Misconceptions to Avoid

“I only need blanking panels in front.”

False. Front and rear containment must be considered together. Even if you use front panels, poor rear sealing (or floor gaps) will still allow thermal crossover.

“Panels are only necessary in new builds.”

Wrong. Blanking panels are even more critical in legacy or mixed-equipment environments where rack utilization varies. Modular options are perfect for retrofits.

“They’re a small fix, not a big impact.”

Incorrect. Blanking panels are a small investment with large ripple effects across your cooling system, server reliability, and total energy usage.

Final Thoughts: Lowering PUE Starts with Smart Airflow Control

Blanking panels are a simple, affordable, and fast-to-deploy solution that directly contributes to a lower PUE and improved cooling efficiency. When paired with other containment strategies, they can make a measurable difference in both energy consumption and hardware performance.

At EziBlank®, we’ve helped data centres around the world reduce their PUE with precision-engineered airflow products that are easy to install, reusable, and built for modern infrastructure.

Ready to improve your airflow efficiency? Explore our full range of blanking panels and supporting solutions to start optimizing your environment today.