AI workloads are redefining the thermal design requirements of data centres. When clusters consist of thousands of GPUs operating simultaneously, heat becomes a performance constraint, not just a facilities concern. The scale of energy consumption, thermal output, and infrastructure density involved in training large language models (LLMs) and generative AI applications has introduced new fault lines in traditional cooling strategies.

High-density GPU environments can exceed 30 kW per rack and, in many cases, operate continuously for days or weeks. Managing this heat load requires a coordinated approach across rack design, airflow containment, monitoring systems, and passive cooling components. A failure in any layer contributes to uneven intake temperatures, recirculation, thermal throttling, or, worse, full compute job rollbacks.
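To give a sense of scale, the airflow needed to carry away a given rack heat load can be estimated from the sensible heat relation, airflow = P / (ρ · c_p · ΔT). The sketch below is illustrative only. The air density and specific heat are approximate values for air near sea level at room temperature, and the 12 K intake-to-exhaust temperature rise is an assumed figure, not one taken from any particular deployment.

```python
# Rough airflow estimate for an air-cooled rack (illustrative assumptions only).
AIR_DENSITY_KG_M3 = 1.2            # approximate, air near sea level at ~20 °C
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0  # approximate specific heat of dry air
M3S_TO_CFM = 2118.88               # 1 m^3/s expressed in cubic feet per minute


def required_airflow_m3s(heat_load_w: float, delta_t_k: float) -> float:
    """Volumetric airflow (m^3/s) needed to remove heat_load_w watts
    with an intake-to-exhaust temperature rise of delta_t_k kelvin."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


if __name__ == "__main__":
    rack_load_w = 30_000   # the 30 kW per rack figure from the text
    assumed_rise_k = 12.0  # assumed temperature rise across the rack
    flow = required_airflow_m3s(rack_load_w, assumed_rise_k)
    print(f"{flow:.2f} m^3/s, roughly {flow * M3S_TO_CFM:.0f} CFM")
```

Because the required airflow scales inversely with the temperature rise, any recirculation that raises intake temperature and narrows the usable rise pushes the airflow requirement up for the same heat load.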

Rack-Level Heat Management Begins with Airflow Integrity

Thermal inefficiencies often originate at the most granular level: the rack. In AI deployments, any unused rack space becomes a point of vulnerability for recirculated exhaust air. This recirculation raises cold aisle temperatures by letting hot air flow into intake paths, creating conditions for GPU throttling or system-wide imbalance.

High-performance facilities rely on passive airflow control to eliminate these weak points. Blanking panels, particularly those designed for tool-free, modular installation, are used to seal all unused rack units and maintain pressure integrity across the cold aisle.

In AI clusters where racks are frequently reconfigured, passive solutions such as EziBlank® blanking panels provide flexibility without compromising thermal performance. The panels support consistent intake temperatures by reducing cold air leakage and preventing hot air from short-circuiting into sensitive zones.
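The rule that every unused rack unit should be sealed lends itself to a simple audit after each reconfiguration. The following is a minimal sketch, assuming a hypothetical inventory that lists which unit positions hold equipment and which hold blanking panels; the function name and data layout are invented for illustration and do not correspond to any vendor tool.

```python
def unsealed_units(rack_height_u: int, occupied: set[int], blanked: set[int]) -> list[int]:
    """Return the rack unit positions (1-based) that hold neither
    equipment nor a blanking panel, i.e. open recirculation paths."""
    all_units = set(range(1, rack_height_u + 1))
    return sorted(all_units - occupied - blanked)


if __name__ == "__main__":
    # Hypothetical 10U rack: equipment in 1-4 and 7-8, panels in 5-6.
    gaps = unsealed_units(10, occupied={1, 2, 3, 4, 7, 8}, blanked={5, 6})
    print("Open rack units needing panels:", gaps)
```

Running a check like this whenever hardware is moved helps catch gaps that frequent reconfiguration would otherwise leave open.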

Preventing Recirculation in Dense GPU Rows

As power density increases across racks, the cooling strategy must also address airflow behavior at the row level. Recirculation isn’t always obvious; it can occur between adjacent racks, around uneven tiles, or through cable penetrations in floors and ceilings. Over time, even minor inefficiencies manifest as hotspot formation and airflow turbulence, which cooling units cannot always compensate for.

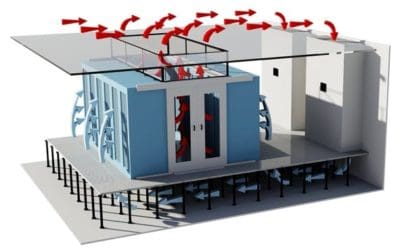

AI data centres address this by building controlled airflow zones using hot aisle or cold aisle containment. These physical barriers ensure separation between intake and exhaust air, thereby increasing the effectiveness of cooling systems and reducing the need for overprovisioning airflow.

Modular wall-mounted systems, such as the EziBlank Wall, are deployed in high-density AI environments to create custom containment structures. Their role is to adapt to layout changes while preserving thermal boundaries across complex infrastructure.

Airflow Challenges Unique to AI Training Environments

For traditional enterprise workloads, cooling systems are designed for variability. AI clusters introduce a different load profile: constant, high-power draw over long durations, often with minimal idle time. This makes airflow consistency as important as cooling capacity.

Distributed training jobs can span hundreds or thousands of GPUs simultaneously. If any part of the cluster experiences thermal instability, the training process slows down or fails. The risk isn’t just elevated temperatures; it’s non-uniformity. GPUs operating outside optimal thermal bands reduce model FLOPS utilization (MFU), extend time-to-completion, and trigger unnecessary checkpoints or recoveries.
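Since the concern here is non-uniformity and not absolute temperature alone, one simple way to surface it is to compare each rack's intake temperature against the cluster median. The sketch below assumes hypothetical rack identifiers, made-up readings, and an arbitrary 3 °C deviation threshold; a real deployment would take these from its own telemetry and operating limits.

```python
from statistics import median


def find_thermal_outliers(intake_temps_c: dict[str, float],
                          max_deviation_c: float = 3.0) -> dict[str, float]:
    """Return racks whose intake temperature deviates from the cluster
    median by more than max_deviation_c, mapped to that deviation."""
    mid = median(intake_temps_c.values())
    return {
        rack: round(temp - mid, 2)
        for rack, temp in intake_temps_c.items()
        if abs(temp - mid) > max_deviation_c
    }


if __name__ == "__main__":
    # Made-up readings for illustration only.
    readings = {"rack-01": 22.0, "rack-02": 22.5, "rack-03": 23.0, "rack-04": 29.0}
    for rack, deviation in find_thermal_outliers(readings).items():
        print(f"{rack}: {deviation:+.2f} °C from cluster median")
```

The median is used instead of the mean so that a single hot rack does not shift the baseline it is being compared against.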

The Nebius whitepaper illustrates this risk. In a 3,000-GPU cluster, even modest differences in thermal reliability translated into more than 30 hours of compute time saved, achieved by reducing interruptions and improving airflow conditions. Blanking panels and modular airflow sealing were part of the foundational design.

Monitoring and Optimisation with AI-Powered Thermal Intelligence

While airflow design prevents thermal instability, monitoring ensures the environment remains predictable under variable workloads. In AI-focused data centres, operators increasingly rely on real-time thermal analytics, using digital twins to simulate airflow anomalies and respond to them as they occur.

Platforms like EkkoSense are integrated into AI deployments to visualise airflow, model pressure gradients, and detect points of cooling inefficiency. When paired with physical airflow control strategies, including containment and modular blanking, the feedback loop becomes more effective. Operators can proactively correct airflow issues before they impact job reliability.

These monitoring systems provide more value when baseline airflow discipline is already in place. Passive solutions reduce noise in thermal telemetry, enabling AI models to predict issues based on actual load variations rather than chaotic airflow behavior.

Designing AI Facilities for Thermal Stability, Not Just Capacity

Thermal optimization in high-density GPU environments is not a reactive process. It begins with infrastructure planning and continues with consistent enforcement of airflow standards.

Data centres that serve AI workloads at scale are designing with containment in mind from the outset. Tool-free blanking panels, modular containment systems, sealed cable entries, and raised floor airflow management are standard components, not retrofit solutions. This approach prevents heat from becoming the limiting factor in AI infrastructure growth.

For deployments with evolving hardware footprints or rapid AI workload scaling, tailor-made airflow solutions help facilities engineers adapt without compromising thermal integrity.

What Heat Management Tells Us About AI Infrastructure Maturity

Heat is not just a byproduct of compute; it’s a signal of how well infrastructure design supports workload demands. In GPU-intensive environments, where training time directly impacts project costs, thermal reliability becomes a competitive differentiator.

The most advanced AI data centres are not cooling harder; they’re controlling airflow more precisely. Solutions that remove variability and recirculation at the physical layer allow cooling systems and monitoring platforms to operate within defined performance bands. The result is greater uptime, better GPU utilization, and reduced cost per training cycle.

Passive airflow control is not a legacy approach. It is the foundation on which hyperscale AI performance is built.